The rapid spread of generative AI is transforming corporate work environments at unprecedented speed. Tasks once confined to internal systems—such as source code summarization, design analysis, and meeting minutes—are increasingly handled through external AI services, delivering clear productivity gains. At the same time, organizations are finding it more difficult to maintain visibility and control over how far internal information travels. Much of this data movement occurs through routine, legitimate workflows, meaning potential risks often emerge only after the fact. In the generative AI era, the central question is no longer whether data moves, but how securely it does.

XCURENET is addressing this challenge through its EMASS AI and EMASS AI PLUS platforms, which continuously analyze internal data flows and view information leakage not as isolated incidents, but as the result of accumulated behavior and evolving patterns. To better understand how corporate information moves in generative AI environments, and how leakage risks take shape there, we spoke with Cha Jae-jeong, head of research at the company.

Q. What problem prompted the development of EMASS AI and EMASS AI PLUS?

A. EMASS AI was developed from the perspective that information leakage is rarely a sudden, isolated accident. More often, it is the outcome of small, everyday behaviors accumulating over time. Traditional security systems have been effective at responding to external threats—such as hacking attempts or malware infections—but information leakage within real corporate environments is far more complex.

Actions like sending slightly more files outside the organization than usual, increased use of email or messaging tools outside business hours, or gradually transferring materials through generative AI or cloud services can all appear to be part of normal work activity. When such behaviors are repeated, however, the likelihood of actual leakage increases significantly. In practice, we often observe cases where files are not sent all at once, but broken into smaller pieces and transmitted over several days.

Conventional security solutions tend to evaluate these actions as isolated events, failing to capture the broader behavioral flow. EMASS AI was designed not as a system that investigates causes after an incident has occurred, but as a continuous analysis framework that detects unusual patterns before they escalate into incidents.
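The split-transfer pattern described in this answer, where no single day looks unusual but the total over several days does, can be illustrated with a trailing-window sum. The following is only a minimal sketch under stated assumptions: the function name `rolling_window_alerts`, the window length, and the threshold are hypothetical choices for illustration and do not describe how EMASS AI is actually implemented.

```python
from collections import deque


def rolling_window_alerts(daily_mb, window_days=7, threshold_mb=500.0):
    """Return the day indices on which the trailing-window total exceeds the threshold.

    daily_mb: outbound volume per day (MB) for one user (hypothetical input).
    window_days, threshold_mb: illustrative values, not product settings.
    """
    window = deque()   # volumes currently inside the trailing window
    total = 0.0        # running sum of the window
    alerts = []
    for day, mb in enumerate(daily_mb):
        window.append(mb)
        total += mb
        # Drop the oldest day once the window is longer than window_days.
        if len(window) > window_days:
            total -= window.popleft()
        if total > threshold_mb:
            alerts.append(day)
    return alerts


# No single day reaches 500 MB, but the five-day totals do.
daily = [20, 30, 120, 130, 110, 140, 25]
alerts = rolling_window_alerts(daily, window_days=5, threshold_mb=500.0)
```

A per-event rule with a 500 MB limit would pass every day in this example, while the windowed total flags the later days. That is the difference between evaluating isolated events and evaluating the flow of behavior.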

Q. How were information leakage risks occurring in ways that existing security solutions failed to detect?

A. Most security solutions are built on the assumption of a single, clear violation of predefined rules. In real-world environments, however, risks often emerge through the accumulation of activities that appear legitimate when viewed individually—such as repeated file transfers outside business hours, routine emails containing sensitive keywords, or indirect leakage through split compression files or generative AI tools.

In cases involving insider leakage, data is frequently not sent directly outside the organization. Instead, it is first forwarded to the individual's own personal email account, often Gmail or a similar service. Even when malicious intent is not explicit, particularly during the process of leaving a company, employees may perceive materials they have worked with for years as part of their personal expertise or professional capital and attempt to take them along.

These types of leakage cannot be addressed through technical controls alone. They are intertwined with organizational culture and employee awareness, presenting challenges that extend beyond conventional security measures.

Q. Should all insider data leaks be treated as malicious crimes?

A. In practice, that is not always the case. While there are certainly instances of deliberate and planned leakage, a substantial portion occurs without the individual recognizing their actions as problematic. This tendency is especially pronounced among software developers or design engineers who have worked with specific materials over long periods and come to regard them as personal know-how or portfolio assets.

Unlike external attacks, this type of perception cannot be resolved through technical blocking alone. For this reason, insider leakage should not be viewed solely as a matter of criminal response, but as an area that requires organizational-level awareness management and institutional safeguards alongside technical controls.

Q. Why has the growth of internal corporate data made “human-centered analysis” insufficient on its own?

A. The volume of messages, files, and network traffic generated daily within corporate environments has already surpassed what humans can reasonably review directly. Approaches that rely on checking a limited number of alerts or sample data make it difficult to structurally identify where risks lie across the organization or how individual user behavior is changing over time.

EMASS addresses this by clearly separating roles. Humans remain responsible for final judgment, while AI continuously observes and summarizes vast amounts of data, surfacing only the signals that genuinely require human attention. Much like a credit card system that flags an unusually large transaction after a history of small payments, EMASS detects deviations from established patterns within the broader flow of activity.

In this sense, EMASS can be understood as an intelligent black box installed within an organization’s confidential environment.

Q. What distinguishes EMASS AI from EMASS AI PLUS?

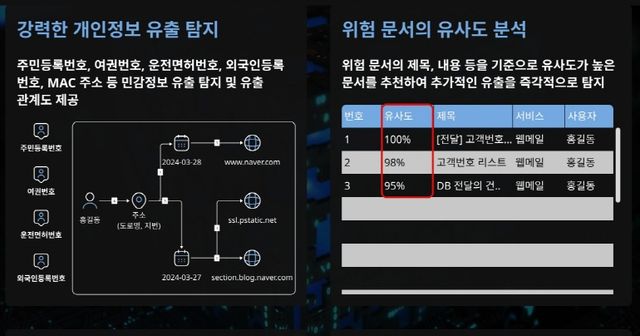

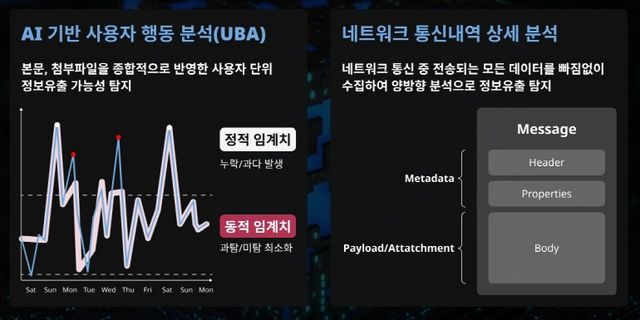

A. EMASS AI is specialized in message- and content-centric analysis. It directly records, stores, and analyzes the actual content involved in leakage, such as email bodies and attachments. EMASS AI PLUS, by contrast, is an expanded model that integrates full network packet analysis into this framework.

This expansion reflects the growing number of leakage methods that are no longer visible through content alone. Documents may be captured as images rather than sent in their original form, or transferred via encrypted messaging platforms and indirect routing paths. EMASS AI PLUS is designed to analyze packets, sessions, and content together, enabling organizations to trace which routes were used and what behavioral patterns actually occurred.

Q. How does the AI detect anomalous user behavior?

A. EMASS learns a time-series baseline of normal behavior for each user, incorporating factors such as time of activity, transmission volume, combinations of services used, and the nature of content handled. It then calculates a dynamic anomaly score based on the distance from historical patterns, the speed of change, and the concurrence of related events.

Context is also taken into account. For example, in real-world environments, it is repeatedly observed that users who show increased access to job-search or career-transition sites later become involved in internal data leakage incidents. EMASS’s AI is not designed to replace human judgment, but to provide explainable grounds for why a user’s behavior has changed, supporting informed decision-making rather than automated conclusions.
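The scoring idea in this answer combines distance from a user's historical pattern, speed of change, and concurrence of related events. It can be sketched roughly as follows. This is an illustrative approximation only: the function name `anomaly_score`, the z-score style distance, and the weights are assumptions made for the example, not the model EMASS actually uses.

```python
from statistics import mean, pstdev


def anomaly_score(history, today, concurrent_events=0,
                  w_distance=0.6, w_velocity=0.3, w_concurrence=0.1):
    """Combine three signals into one score for a single user and metric.

    history: past daily values for the user (e.g. MB sent externally).
    today: the value being scored.
    concurrent_events: count of related events seen in the same period
        (hypothetical input, e.g. off-hours activity).
    The weights are arbitrary illustrative values.
    """
    if len(history) < 2:
        raise ValueError("need at least two days of history")
    baseline = mean(history)
    spread = pstdev(history) or 1.0  # fall back to 1.0 when history is constant
    distance = abs(today - baseline) / spread      # distance from historical pattern
    velocity = abs(today - history[-1]) / spread   # speed of change since yesterday
    return (w_distance * distance
            + w_velocity * velocity
            + w_concurrence * concurrent_events)


history = [10, 12, 11, 9, 10, 11, 10]
normal_day = anomaly_score(history, 11)
spike_day = anomaly_score(history, 60, concurrent_events=2)
```

Because the three terms are kept separate before being combined, a reviewer can see which factor drove a high score. That is one simple way to support the explainability the answer emphasizes.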

Q. As security measures become more robust, some employees may feel they are being “monitored.” How is this concern addressed?

A. That concern is very real in practice. For this reason, many companies that deploy systems like EMASS operate them alongside formal employee consent procedures and internal guidelines. It is essential to clearly explain what constitutes security-driven analysis and what types of information fall outside the scope of collection.

EMASS is not designed to label or stigmatize specific individuals, nor to replace human judgment. It functions more as a decision-support framework, providing security officers with evidence and context so they can make reasonable, informed assessments.

Q. How is the spread of generative AI changing corporate security environments?

A. Generative AI has become one of the most sensitive issues for corporate security teams today. It is now routine for employees to input source code, design blueprints, or internal meeting minutes directly into AI tools to request summaries or analysis. As these interactions accumulate, the services can begin to feel as though they “know” a company’s internal information.

At the same time, it is difficult to impose a blanket ban on generative AI usage, given its significant impact on productivity. As a result, more companies are shifting away from outright prohibition and toward managing AI use within controlled environments. This places security teams in a dilemma: allowing such services introduces risk, while blocking them outright can undermine competitiveness.

Q. In this changing environment, how is the role of security systems evolving?

A. Security systems can no longer be tools that simply generate large volumes of alerts. Instead, they must help organizations distinguish between risks they can reasonably accept and those they cannot. Treating every action as a problem is not a sustainable approach.

EMASS is designed around this principle. Its goal is to surface only those deviations within everyday activity flows that are likely to pose real issues. This enables security teams to move beyond incident response and toward a strategic role—one focused on understanding and managing how data moves across the organization.

Q. How might EMASS AI and EMASS AI PLUS reshape corporate security operations going forward?

A. They have the potential to shift the center of security from post-incident analysis to the management of early warning signs. Security teams would no longer exist solely to respond after incidents occur, but to understand organizational data flows and manage risk proactively. EMASS aims to serve as the foundational infrastructure that supports such judgment.

Generative AI has already become deeply embedded in corporate operations. The question is no longer whether to use it, but how to manage it—under what standards and from what perspective. Internal data leakage, too, has entered an era where it cannot be explained solely as isolated incidents or individual misconduct. Small changes accumulating within normal workflows can turn into risk at any moment.

XCURENET’s emphasis on “the accumulation and flow of behavior” reframes security from a reactive function to a proactive one. In the age of generative AI, corporate security is no longer about simple restriction, but about building systems that can understand and assess data flows. Depending on the choices organizations make, generative AI can become either a powerful tool for productivity—or an unmanaged source of risk.

Copyright ⓒ Sisun News. Unauthorized reproduction and redistribution prohibited.