Kioxia Corporation, a world leader in memory solutions, has successfully developed a prototype of a large-capacity, high-bandwidth flash memory module essential for large-scale artificial intelligence (AI) models. This achievement was made within the “Post-5G Information and Communication Systems Infrastructure Enhancement R&D Project (JPNP20017)” commissioned by the New Energy and Industrial Technology Development Organization (NEDO), Japan's national research and development agency. This memory module features a large capacity of five terabytes (TB) and a high bandwidth of 64 gigabytes per second (GB/s).

To address the trade-off between capacity and bandwidth that has challenged conventional DRAM-based memory modules, Kioxia has developed a new module configuration in which flash memories are connected in a daisy chain, like beads on a string. The company has also developed high-speed transceiver technology enabling a bandwidth of 128 gigabits per second (Gbps), along with techniques to enhance flash memory performance. These innovations have been applied to both the memory controller and the memory module.

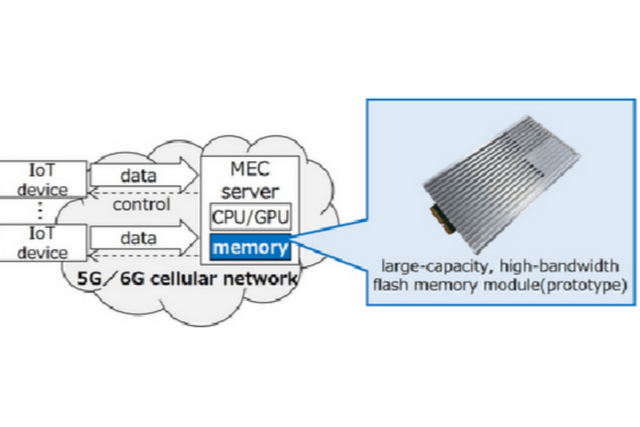

The practical application of this memory module is expected to accelerate digital transformation by enabling the adoption of the Internet of Things (IoT), big data analysis, and advanced AI processing in post-5G/6G Mobile Edge Computing (MEC) servers and other applications.

In the post-5G/6G era, wireless networks are expected to achieve higher speeds, lower latency, and the ability to connect more devices simultaneously. However, transmitting data to remote cloud servers for processing increases latency across the entire network, including wired networks, making real-time applications difficult. For this reason, there is a need for the widespread adoption of MEC servers that process data closer to users, which is expected to drive digital transformation across a variety of industries. Furthermore, the demand for advanced AI applications, such as generative AI, has been rising in recent years. Alongside the performance improvements of MEC servers, memory modules are also required to have even larger capacity and higher bandwidth.

Against this background, Kioxia has focused on enhancing the capacity and bandwidth of memory modules using flash memory for this project. The company has succeeded in developing a prototype memory module with a capacity of 5 TB and a bandwidth of 64 GB/s, and has verified its operability.

To achieve both large capacity and high bandwidth in a memory module, Kioxia has adopted a daisy-chain connection instead of a bus connection, with the controllers connected to each memory board linked one after another like beads on a string. As a result, bandwidth is not degraded even when the number of flash memories is increased, and a capacity beyond the conventional limit is achieved.
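The addressing idea behind a daisy chain can be pictured with a toy model. The sketch below is illustrative only: the node count, the per-node capacity, and the `ChainNode` and `route` names are hypothetical and do not describe the prototype's actual layout. It shows that adding a node to the chain extends the address space, while every link stays a point-to-point connection between neighbors.

```python
from dataclasses import dataclass


@dataclass
class ChainNode:
    """One controller plus its flash; the capacity value is hypothetical."""
    capacity_gb: int


def total_capacity_gb(chain):
    """Capacity of the whole chain grows with every node that is appended."""
    return sum(node.capacity_gb for node in chain)


def route(chain, address_gb):
    """Return (node_index, hops_from_host) for the node owning address_gb.

    A request enters at node 0 and is forwarded downstream until it reaches
    the node whose address range contains it.
    """
    if address_gb < 0:
        raise ValueError("address must be non-negative")
    base = 0
    for index, node in enumerate(chain):
        if address_gb < base + node.capacity_gb:
            return index, index + 1
        base += node.capacity_gb
    raise ValueError("address is beyond the module capacity")


# Assumed values for illustration, not the prototype's real configuration.
chain = [ChainNode(capacity_gb=1000) for _ in range(5)]
print(total_capacity_gb(chain))  # 5000
print(route(chain, 2500))        # (2, 3)
```

In this model a request for a far node crosses more hops, so the chain trades some latency for capacity that scales with the number of nodes.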

High-speed differential serial signaling, rather than parallel signaling, is applied to the daisy-chain connections between memory controllers to reduce the number of connections, and PAM4 (Pulse Amplitude Modulation with 4 Levels) is used to achieve a higher bandwidth of 128 Gbps with low power consumption.
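The benefit of PAM4 follows from simple arithmetic: four amplitude levels carry two bits per symbol, so a 128 Gbps link needs half the symbol rate of two-level signaling. The sketch below works this out and encodes a short bit sequence. The Gray-coded level mapping is a common convention and is assumed here; the source does not state which mapping the transceiver uses, and the function names are hypothetical.

```python
def bits_per_symbol(levels: int) -> int:
    """Bits carried by one symbol when `levels` is a power of two."""
    if levels < 2 or levels & (levels - 1):
        raise ValueError("levels must be a power of two, at least 2")
    return levels.bit_length() - 1


def symbol_rate_gbaud(bit_rate_gbps: float, levels: int) -> float:
    """Symbol rate required to carry bit_rate_gbps with the given levels."""
    return bit_rate_gbps / bits_per_symbol(levels)


# Assumed Gray mapping: adjacent levels differ by exactly one bit.
GRAY_LEVELS = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def pam4_encode(bits):
    """Map a bit sequence of even length to PAM4 levels 0..3."""
    if len(bits) % 2:
        raise ValueError("PAM4 consumes bits in pairs")
    return [GRAY_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


print(symbol_rate_gbaud(128, 4))              # 64.0 (PAM4)
print(symbol_rate_gbaud(128, 2))              # 128.0 (two-level signaling)
print(pam4_encode([0, 0, 0, 1, 1, 1, 1, 0]))  # [0, 1, 2, 3]
```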

To shorten the read latency of flash memory in memory modules, Kioxia has developed flash prefetch technology, which minimizes latency by prefetching data during sequential accesses, and has implemented it in the controller. In addition, the bandwidth of the interface between the memory controller and flash memory has been increased to 4.0 Gbps by applying low-amplitude signaling and distortion correction/suppression technology.
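The effect of prefetching on sequential reads can be illustrated with a small simulation. This is a generic sequential prefetcher, not Kioxia's implementation: the latency figures, the prefetch depth, and the `PrefetchingReader` class are all assumptions chosen for illustration, and the model treats prefetches as completing in the background before the next read arrives.

```python
class PrefetchingReader:
    """Toy sequential prefetcher with hypothetical latency values."""

    def __init__(self, flash_latency_us=50.0, buffer_latency_us=1.0, depth=4):
        self.flash_latency_us = flash_latency_us    # assumed miss cost
        self.buffer_latency_us = buffer_latency_us  # assumed hit cost
        self.depth = depth                          # pages fetched ahead
        self.buffered = set()
        self.last_page = None

    def read(self, page: int) -> float:
        """Return the latency of reading `page` and update prefetch state."""
        hit = page in self.buffered
        latency = self.buffer_latency_us if hit else self.flash_latency_us
        self.buffered.discard(page)
        # A read of the page right after the previous one signals a
        # sequential stream, so the next `depth` pages are fetched ahead.
        if self.last_page is not None and page == self.last_page + 1:
            self.buffered.update(range(page + 1, page + 1 + self.depth))
        self.last_page = page
        return latency


def total_latency_us(pages) -> float:
    reader = PrefetchingReader()
    return sum(reader.read(page) for page in pages)


print(total_latency_us(range(8)))                        # 106.0
print(total_latency_us([10, 3, 25, 7, 40, 1, 18, 33]))   # 400.0
```

With these assumed numbers, the sequential stream pays the flash latency only for the first two pages, while the scattered pattern pays it on every read.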

By implementing 128 Gbps PAM4 high-speed, low-power transceivers and technologies that boost flash memory performance, Kioxia has prototyped a memory controller and a memory module that uses PCIe® 6.0 (64 Gbps, 8 lanes) as the host interface to the server. The prototype demonstrated that a capacity of 5 TB and a bandwidth of 64 GB/s can be realized with less than 40 watts of power consumption.
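The headline figures are consistent with each other, as a short calculation shows. The sketch below uses raw signaling rates only and ignores encoding and protocol overhead, so delivered throughput would be somewhat lower. It also assumes decimal units (1 TB = 1000 GB) and treats 40 W as an upper bound; the helper name is hypothetical.

```python
def link_bandwidth_gbytes(per_lane_gbps: float, lanes: int) -> float:
    """Raw link bandwidth in GB/s: lanes times per-lane Gbps, 8 bits per byte."""
    return per_lane_gbps * lanes / 8


host_gbytes = link_bandwidth_gbytes(64, 8)    # PCIe 6.0 x8 host interface
chain_gbytes = link_bandwidth_gbytes(128, 1)  # one 128 Gbps daisy-chain link

capacity_gb = 5 * 1000  # 5 TB, decimal units assumed
power_w = 40            # stated upper bound on power consumption

print(host_gbytes)                # 64.0 GB/s, matching the stated bandwidth
print(chain_gbytes)               # 16.0 GB/s per serial link
print(host_gbytes / power_w)      # 1.6 GB/s per watt, at minimum
print(capacity_gb / host_gbytes)  # 78.125 s to stream the full capacity once
```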

Kioxia is promoting the early commercialization and practical implementation of the findings from this research, targeting IoT, big data analysis, and advanced AI processing at the edge, as well as new market trends such as generative AI.

Copyright ⓒ AI Post. Unauthorized reproduction and redistribution prohibited.
